Before we talk about the future of anesthesia, let’s look back at how things change. Change can come slowly or with astonishing suddenness. If you were training or practicing (or know someone who was) in the early 1980s, the anesthetic recipe might very well have involved:

- Thiopental for induction

- Halothane and nitrous oxide for maintenance

- Meperidine, morphine, or fentanyl for analgesia

- Laryngoscope

- Innovar (a fixed combo of fentanyl & droperidol)

- Succinylcholine, curare, or pancuronium for relaxation

- Manually inflated BP cuff, ECG, nerve stimulator (maybe)

- Pneumatic-powered anesthesia machine

- Black rubber breathing circuit and Ohio mask

- Pen and paper charting

While a few items have survived the cut, consider what’s missing from the list: interventions that are now staples of contemporary practice. Today we have sevoflurane, propofol, rocuronium, dexmedetomidine, oscillometric BP, pulse oximeters, infrared agent analyzers for carbon dioxide and our potent inhaled agents, accelerometers, video laryngoscopes, and ultrasound devices. You get the message: change is a constant.

The acceleration of technology and knowledge in our specialty continually creates, at an astonishing pace, additive and synergistic applications of innovative devices, new approaches, and new ways of interacting with our patients. Throw in a large dose of human inventiveness, entrepreneurial spirit, industrial competitiveness, open-minded regulators, and shifting popular opinion, and what seems like futuristic thinking may already be knocking on our door.

Star Wars, Doug Quaid, Tony Stark, and many others!

The motion picture industry can conjure magical moments that later appear in real life. We love films, and with the theme of visionary technology, consider just a few of our favorites:

- Video calling is displayed in the 1927 film Metropolis.

- The Day the Earth Stood Still is one of the “smartest” sci-fi films of all time. The 1951 film’s robot, Gort, may be the first true artificial intelligence (AI) in the history of motion pictures.

- The 1968 film 2001: A Space Odyssey depicted a fully functional tablet and machine learning (ML).

- Dick Tracy’s smartwatch, in 1946. OK, not a film, but we like comic strips too!

- Autonomous cars appear in the 1990 film Total Recall.

- And oh, so many more!!!

Now think of the original Star Wars, where the ever-resourceful R2-D2 creates a hologram of Princess Leia requesting help from Obi-Wan Kenobi. And what about the first Total Recall film, where Doug Quaid (future governor of California Arnold Schwarzenegger!!) uses his watch to project a full-body holographic image of himself into a previously empty space?

Then we have Tony Stark (Robert Downey, Jr.) in Iron Man 2, where holograms are a centerpiece of the film and give him superhuman capacities to invent and analyze.

Besides being entertaining, what does all that have to do with us?

The technology company IKIN has created remarkable 3D holographic devices that project holographic content in color and ambient light in a large format. Their approach allows the human side of the interface to enlarge and manipulate projection elements without the need for goggles. Euclideon, an Australian tech company, uses a similar approach. The RYZ Holographic System™ seeks to optimize and maximize the technology’s applications.

There is no shortage of interest in the field: companies with intriguing names like Vivid Q, Haloxica, HyperVsn, and many others are aggressively developing their approaches. The company Humane, an AI-intense entrepreneurial effort, has patented technologies that use laser projections, three-dimensional cameras, and biosensors. And let’s not forget what Apple, Amazon, Google, Microsoft, NVIDIA, Tesla, and Meta may be up to! Many of these technologies have yet-to-be-defined targets, and their developers are quietly pursuing applications of them, and of their offspring, that may replace the computers, smartphones, and smartwatches we currently use.

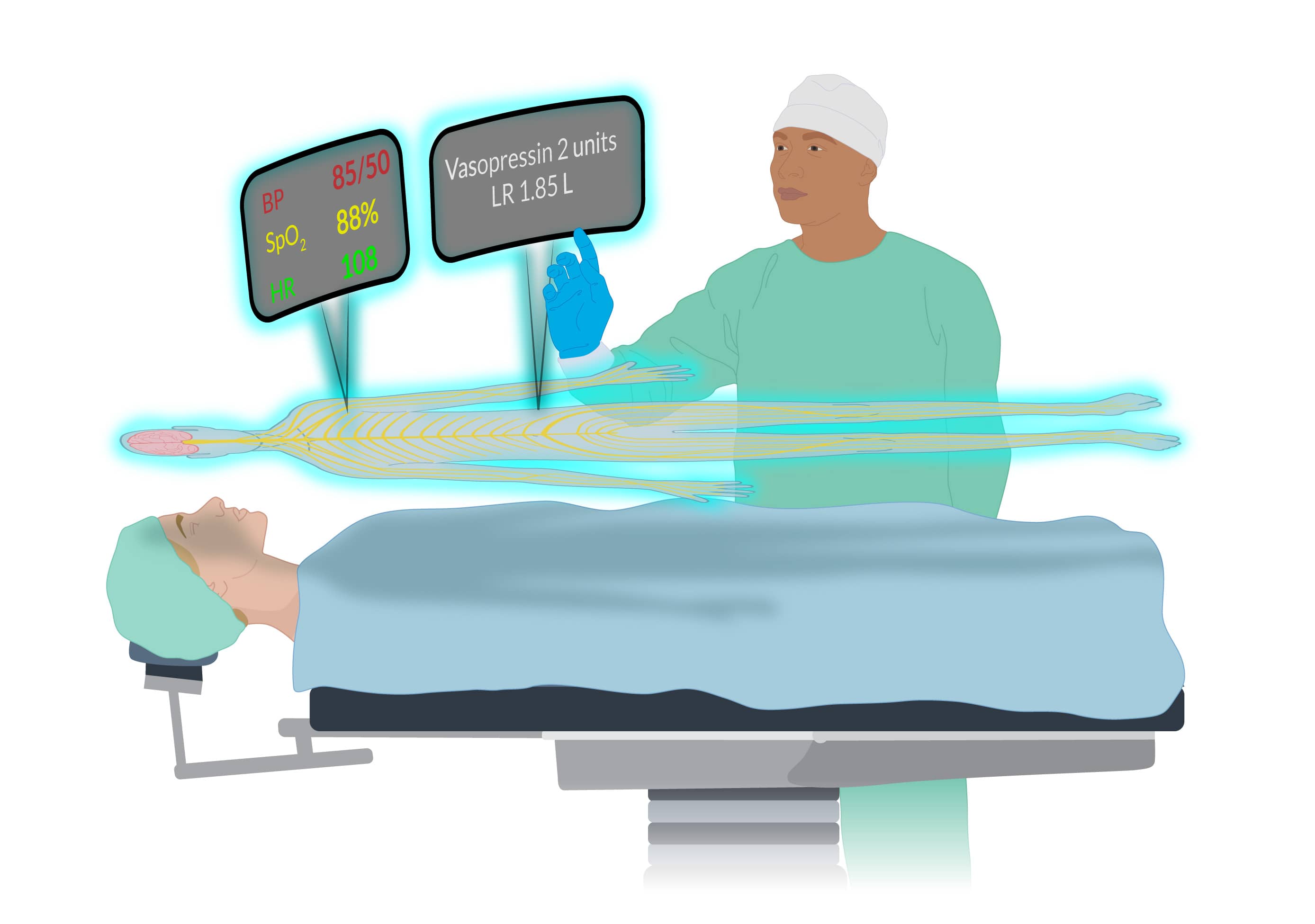

Exactly how this will play out in healthcare, specifically in the CRNAs’ milieu where the display, analysis, and management of physiological data are key to what we do, requires clarification. But what we see in movies, where holographic images and icons hang in the air for protagonists to manipulate—well, that “fantasy” may be a lot closer to our reality than we might think.

Predicting what will (might) happen to our patients

It’s not all speculative science fiction that is shaping our future workplace. In some ways, the future of anesthesia has already arrived with what may best be characterized as a punctuated evolution. Many new initiatives are focusing on innovative monitoring that guides clinical decisions to:

- Maintain or improve brain health

- Avoid failure-to-rescue (FTR)

- Lower the risk of acute kidney injury

- Reduce MINS (myocardial injury after noncardiac surgery)

- Lessen postop opioid use disorder

- Reduce the risk of cardiac arrest

A batch of approaches termed medical early warning systems (MEWS), some in the works and some already with us, are designed to help us recognize the warning signs of a deteriorating patient before a human would identify them. The general view is that these will become commonplace throughout our specialty, not only in the OR, but also in the PACU, ICU, and postop hospital wards. MEWS can warn us about the implications of what might only be a subtle, easily overlooked change in a patient’s condition and predict conditions like hypoxemia, hypotension, embolic issues, metabolic disturbances, and neurological insult. This is based on exquisite, real-time monitoring of key physiological parameters with ongoing AI or ML integration.

The Edwards Hypotension Prediction Index is an existing system with a research and clinical track record. It uses machine learning to predict intraoperative hypotension before it happens.
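To make "predicting before it happens" concrete, here is a deliberately simple toy sketch. It is not the method used by the Hypotension Prediction Index or any commercial product; it only fits a straight line to recent mean arterial pressure (MAP) readings and estimates when that line would cross a threshold. The function names, the 65 mmHg threshold, and the 15-minute horizon are all assumptions chosen for illustration, and nothing here is intended for clinical use.

```python
# Toy illustration only: a straight-line trend extrapolation of MAP.
# All names and default values below are hypothetical, chosen for this sketch.

HYPOTENSION_MAP_MMHG = 65.0  # assumed threshold for "hypotension" in this sketch


def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def minutes_until_threshold(times_min, map_mmhg, threshold=HYPOTENSION_MAP_MMHG):
    """Minutes from the last reading until the fitted trend reaches the threshold.

    Returns 0.0 if the last reading is already at or below the threshold,
    and None if the fitted trend is flat or rising.
    """
    if map_mmhg[-1] <= threshold:
        return 0.0
    slope, intercept = fit_line(times_min, map_mmhg)
    if slope >= 0:
        return None
    crossing_time = (threshold - intercept) / slope
    return max(0.0, crossing_time - times_min[-1])


def should_alert(times_min, map_mmhg, horizon_min=15.0):
    """True if the trend projects a threshold crossing within the horizon."""
    eta = minutes_until_threshold(times_min, map_mmhg)
    return eta is not None and eta <= horizon_min
```

For example, readings of 85, 83, 81, 79, and 77 mmHg taken one minute apart fall by 2 mmHg per minute, so the sketch projects a crossing of 65 mmHg about 6 minutes after the last reading and `should_alert` returns `True`. Real systems draw on far richer inputs than a single trend line, which is exactly the point the next paragraph makes.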

This is complicated stuff. It is not just about measuring HR and BP but rather using biosensors to collect and integrate data that might include breathing patterns, cognitive function, genetics, body position, stress level, infection risk, level of consciousness, biomolecules, and other factors limited only by our imagination.

A British Journal of Anaesthesia paper recently demonstrated an AI platform’s keen ability to use intraop data analysis to predict postop hypotension. Another fascinating paper, in Nature Reviews Bioengineering, revealed the uncanny ability of an AI tool called ‘Prescience’ to forewarn of hypoxemia. The key theme in all these technologies is looking beyond the immediate at what may be coming.

Consider that there is a well-recognized 10-30% in-hospital risk of patients experiencing dangerous postop complications. Continuous postop surveillance monitoring systems, aided by AI, can decrease the risk of failure-to-rescue events by predicting which patients might deteriorate before clinical evidence appears. Integrating AI and ML with existing monitoring strategies can detect patterns of subtle, nuanced changes in patient parameters that providers will likely overlook.

Important questions to ask with no easy answers

Let’s finish by asking questions relevant to CRNAs and all healthcare workers. While we do not presume to have all the answers, we hope to get you thinking about some of the implications of new technologies.

What potential does healthcare technology have for patient care?

It will be revolutionary, as AI allows us to access, analyze, and apply superhuman amounts of data to diagnostic and healthcare decision-making with greater speed and certainty (errorless), and at lower cost, than a human alone can muster.

What limits are envisioned for healthcare technology?

Besides immediate application to patients, AI and virtual reality will be increasingly used in teaching, diagnostics, problem-solving, and administrative tasks. That is, they have no limits. Biosensor technology is rapidly growing. Miniaturized autonomous sensors will be self-learning and never need maintenance, as they will be self-calibrating.

What are the challenges that new technologies create?

Data security and integrating new technology with that currently used will pose problems. As many of us have seen with EMRs, their incorporation into an institution’s existing platforms is rarely seamless. Also, who pays for these technologies, and what are their environmental impacts?

The past is what you’ve done – the future of anesthesia is what you have learned

Consider the original anesthetic recipe we presented at the start. In the early 1980s, the risk of dying from anesthesia was 1:10,000; today, it’s almost unmeasurable in ASA 1-3 patients. Advances in technology play an enormous role in enhancing the safety of our work.

Allow us to speculate. In the not-too-distant future, you may voice-activate a hologram powered by AI to consult with an expert in real time as you care for a patient. A synthesis of data gleaned from myriad biosensors, displayed in a user-friendly interface, will be available to you. With finger or voice activation, you will have immediate access to all the world’s published information relevant to the patient you care for.

This all may be closer than you can imagine. And that’s just it: our imagination is the driver behind all that is coming.

As CRNAs ourselves, we understand the challenge of fitting CRNA continuing education credits into your busy schedule. When you’re ready, we’re here to help.